Keeping track of AWS metrics across multiple accounts is an onerous task. A Python script, run as a scheduled Kubernetes job, makes it easy. Posting the data into Elasticsearch makes extracting information from the data easy too!

AWS-Reader Python Script

The AWS-Reader script requests a token from Vault using valid credentials. HC-Vault accepts the credentials and sends AWS-Reader a valid token to pass to AWS-Vault. AWS-Reader then asks AWS-Vault for credentials to access AWS. AWS-Vault opens communication with AWS and requests valid credentials. AWS generates a key and sends it back to AWS-Vault, which passes it on to AWS-Reader. AWS-Reader then uses the key to log on to AWS. Phew, maybe a diagram would help…

The program uses multiple functions to connect to different AWS services. AWS’s boto3 module is used to create a client for each service the script accesses, which means passing the relevant AWS credentials to the client each time. The credentials are held in the vault_secrets parameter, which is passed in each time a function is called. Each AWS service has different method parameters that are required for a session. Once the client connection to AWS is established, the program calls a method on the service client to get a particular set of information. For most services, the boto3 method follows the pattern “describe_<category>()”, for example describe_instances() for EC2.
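As a rough illustration of the pattern described above, the sketch below builds a boto3 client from a `vault_secrets` dictionary and calls one `describe_*` method. The key names (`access_key`, `secret_key`, `security_token`), the helper names and the default region are assumptions made for this example; they are not taken from the AWS-Reader source.

```python
def client_kwargs(vault_secrets, region="eu-west-1"):
    """Map Vault-issued credentials onto boto3.client() keyword arguments.

    The vault_secrets key names used here are assumed, not confirmed.
    """
    kwargs = {
        "region_name": region,
        "aws_access_key_id": vault_secrets["access_key"],
        "aws_secret_access_key": vault_secrets["secret_key"],
    }
    # Temporary credentials also carry a session token; pass it on if present.
    if vault_secrets.get("security_token"):
        kwargs["aws_session_token"] = vault_secrets["security_token"]
    return kwargs


def make_client(service, vault_secrets, region="eu-west-1"):
    """Create a boto3 client for one AWS service, e.g. "ec2" or "rds"."""
    import boto3  # imported lazily so client_kwargs() can be used without the SDK

    return boto3.client(service, **client_kwargs(vault_secrets, region))


def get_instances(vault_secrets):
    """Hypothetical reader function: fetch the raw EC2 instance description."""
    ec2 = make_client("ec2", vault_secrets)
    return ec2.describe_instances()
```

Keeping the credential mapping in one helper means every service function can reuse it and only the service name and the `describe_*` call change.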

To allow the script to run against multiple accounts, we used the argparse module to pass the account name in as a command-line argument.
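The argument handling might look something like the sketch below. The `-a` and `-p` flags match the command quoted later in this post, but the long option names and the meaning given to `-p` (post the results) are assumptions for illustration.

```python
import argparse


def build_parser():
    """Build the command-line parser for a single-account run."""
    parser = argparse.ArgumentParser(
        prog="aws-reader.py",
        description="Read metrics from one AWS account.",
    )
    # -a selects the account to read; the long name --account is assumed.
    parser.add_argument(
        "-a", "--account", required=True,
        help="name of the AWS account to read",
    )
    # -p appears in the real command; treating it as a "post results"
    # switch is a guess made for this example.
    parser.add_argument(
        "-p", "--post", action="store_true",
        help="post the results after collecting them",
    )
    return parser


# Parse an explicit argument list rather than sys.argv, for demonstration.
args = build_parser().parse_args(["-a", "example-account", "-p"])
print(f"Reading account {args.account} (post={args.post})")
```

Because the account is an argument rather than a hard-coded value, the same script and container image can be reused unchanged for every account.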

Each function is called in turn, and once a function has successfully retrieved information using a specific boto3 method, the information is filtered and presented in a readable format. A useful way of understanding the format and making it presentable was to test the calls in IPython before inserting the commands into my script. AWS presents the results of method calls in JSON, which boto3 returns as Python dictionaries. I first came to understand the raw data structure, then stripped the raw data back to the information I needed, before assigning and storing the relevant output and presenting it in the main code.
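To make the filtering step concrete, here is a minimal sketch that strips an EC2 `describe_instances()` response back to a few fields. The function name and the choice of fields are illustrative assumptions, and the sample data is hand-written in the shape boto3 returns rather than real output.

```python
def summarise_instances(response):
    """Reduce a describe_instances() response to a few fields per instance."""
    summary = []
    # Instances are nested inside reservations in the raw response.
    for reservation in response.get("Reservations", []):
        for instance in reservation.get("Instances", []):
            summary.append({
                "id": instance["InstanceId"],
                "type": instance["InstanceType"],
                "state": instance["State"]["Name"],
            })
    return summary


# Hand-written sample; the IDs and values are made up.
sample = {
    "Reservations": [
        {"Instances": [
            {"InstanceId": "i-0001", "InstanceType": "t3.micro",
             "State": {"Code": 16, "Name": "running"}},
            {"InstanceId": "i-0002", "InstanceType": "t3.small",
             "State": {"Code": 80, "Name": "stopped"}},
        ]},
    ]
}

for row in summarise_instances(sample):
    print(f"{row['id']}  {row['type']}  {row['state']}")
```

Exploring a response like `sample` interactively in IPython first makes it much easier to see which keys are worth keeping.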

Build a Container

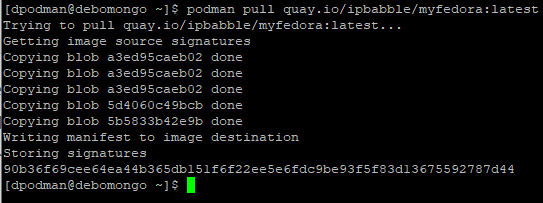

I used Podman to build the base AWS-Reader image. Similar to Docker, it allows you to build an image that can be shared with others by pushing it to a repository. Docker requires a daemon, which in turn requires root access on the workstation, and that invites security concerns, whereas Podman is daemonless. Because there is no need for the user to alter permissions to run it, Podman is more user-friendly. Those familiar with Docker will also be familiar with Podman because it uses the same commands (see the example of Podman in action below). In this instance Podman pulls from Artifactory, a cloud software repository, to build images; however, it can just as easily pull from Docker or Quay repositories.

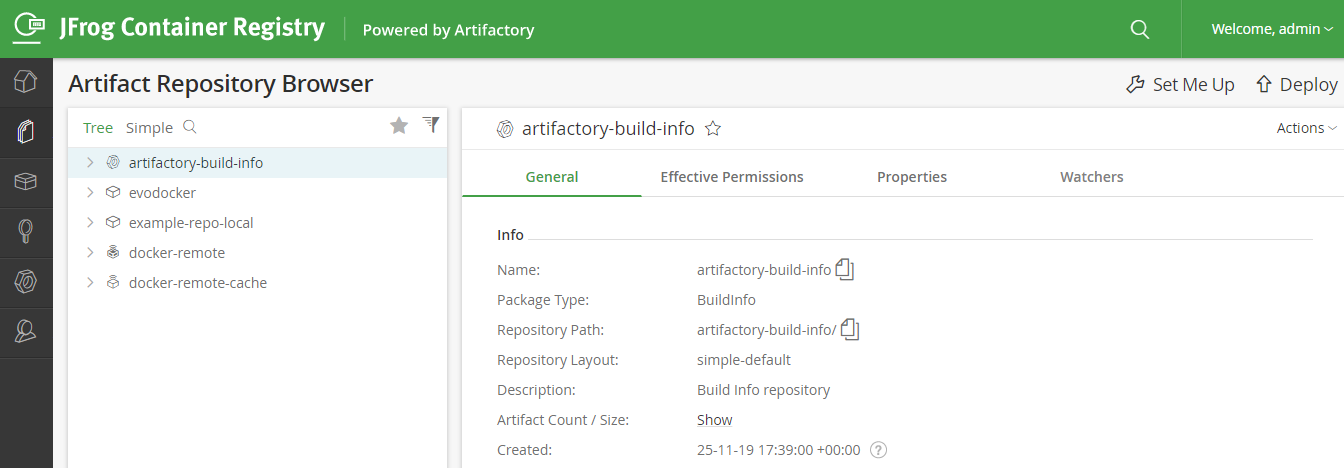

Container Repository

Artifactory is an internal repository system that allows users to download images (exact copies of software or data) and run them securely on their own workstations. Placing AWS-Reader in an Artifactory repository allows users with credentials for the repository to pull the AWS-Reader image and run the code on their own workstation.

Rancher-Kubernetes

Now that the program is containerised, the script can be run on a Kubernetes cluster whenever it is needed. Rancher is a Kubernetes management platform and provides added value for CI/CD projects such as ours.

Rancher Workloads allow you to specify deployment rules for the pods that fall inside them. Each pod is described by a YAML config file that details the type of container that will be created in order to run the script.

CronJobs

Inside the YAML file we can specify the type of workload for the pod. By selecting CronJob, we ask the pod to run the script at regular intervals. The schedule is written in cron format, and the script is executed automatically at the allotted time.

Executable Command

The most important Rancher UI option is the executable command to be run once the container has been created.

The “aws-reader.py -a <AWS_ACCOUNT> -p” command runs the Python script, where “AWS_ACCOUNT” stands in for the name of the particular AWS account you want to read. This argument in the workload is significant because it further automates the process of running the script. The alternative would be to go into a running pod and run the command manually. One workload pod is deployed per account, with the shell command taking that account as its argument; the pod runs the Python script and shuts down once it has finished.
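To show how the schedule and the per-account command fit together, the sketch below generates a Kubernetes CronJob manifest for each account in Python and prints it as JSON, which Kubernetes accepts in place of YAML. The account names, the schedule and the image path are placeholders, and this is not the configuration Rancher produced for us; it is only an approximation of the same idea.

```python
import json


def cronjob_manifest(account, schedule, image):
    """Build a batch/v1 CronJob manifest that runs the reader for one account."""
    return {
        "apiVersion": "batch/v1",
        "kind": "CronJob",
        "metadata": {"name": f"aws-reader-{account}".lower()},
        "spec": {
            # Standard five-field cron expression.
            "schedule": schedule,
            "jobTemplate": {"spec": {"template": {"spec": {
                "containers": [{
                    "name": "aws-reader",
                    "image": image,
                    # The command quoted above, with the account filled in.
                    "command": ["aws-reader.py", "-a", account, "-p"],
                }],
                # Job pods must not restart forever once the script exits.
                "restartPolicy": "Never",
            }}}},
        },
    }


# Placeholder accounts, schedule (06:00 daily) and image path.
for account in ["account-one", "account-two"]:
    manifest = cronjob_manifest(
        account, "0 6 * * *", "artifactory.example.com/aws-reader:latest"
    )
    print(json.dumps(manifest, indent=2))
```

Generating one manifest per account keeps the runs independent, so a failure reading one account does not stop the others.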

Thanks for reading. If you’d like to know more, contact us at info@evolvere-tech.co.uk.